Local Large Language Models & How to Use Domestic AI Services Compatible with the OpenAI ChatGPT API

In video translation and dubbing software, AI large language models can serve as efficient translation channels. Because they take the surrounding context into account, they can significantly improve translation quality.

Currently, most domestic (Chinese) AI services offer interfaces compatible with the OpenAI API, so you can use them directly through the software's OpenAI ChatGPT API settings or its local large language model settings. You can also deploy ollama locally and use it the same way.

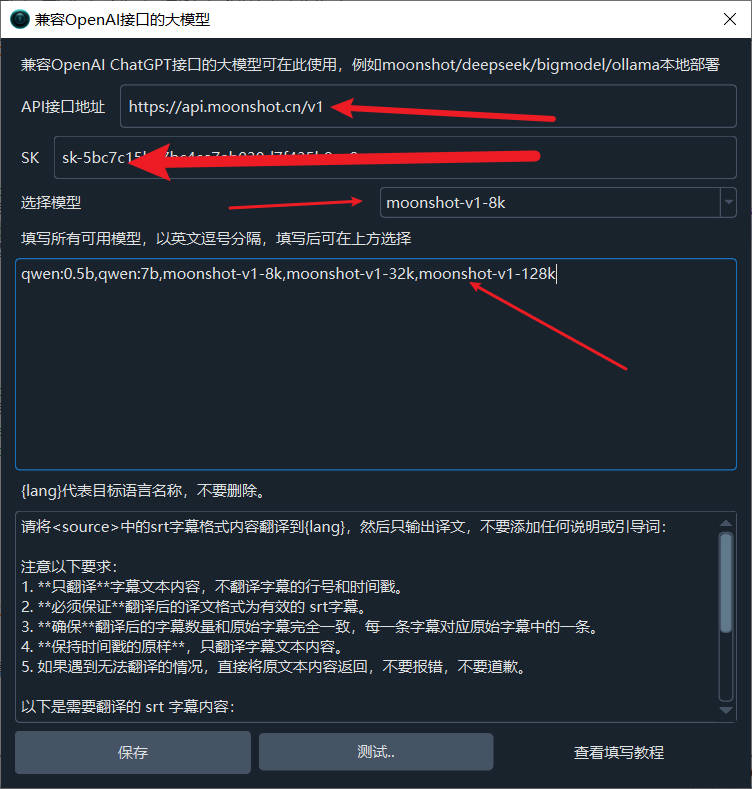

Moonshot AI Usage

- Menu bar -- Translation Settings -- OpenAI ChatGPT API Settings interface

- Enter https://api.moonshot.cn/v1 in the API interface address.

- Fill in the API Key obtained from the Moonshot open platform in the SK field. You can obtain it from this website: https://platform.moonshot.cn/console/api-keys

- Enter moonshot-v1-8k,moonshot-v1-32k,moonshot-v1-128k in the model text box area.

- Then select the model you want to use in the model selection, test it, and save the settings if the test succeeds.
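The three settings above (API interface address, SK, and model) map onto the fields of a standard OpenAI-style chat request. The sketch below, using only the Python standard library, shows roughly what such a request looks like. It assumes the software appends /chat/completions to the API address and sends the SK as a Bearer token, which is the usual OpenAI convention but is not confirmed by this guide; the prompt text and the key "sk-your-key" are placeholders.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, text: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-compatible chat completion request."""
    # Strip a trailing "/" so an address like Zhipu's ".../v4/" does not produce "//".
    url = base_url.rstrip("/") + "/chat/completions"
    payload = {
        "model": model,
        "messages": [
            # Illustrative prompt only; the software's real prompt is not shown in this guide.
            {"role": "system", "content": "Translate the user's subtitles into English."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # In the OpenAI convention the SK is sent as a Bearer token.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_chat_request("https://api.moonshot.cn/v1", "sk-your-key", "moonshot-v1-8k", "你好")
    print(req.full_url)  # https://api.moonshot.cn/v1/chat/completions
```

Sending it would be a matter of passing the request to urllib.request.urlopen; it is left unsent here so nothing is charged to your key.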

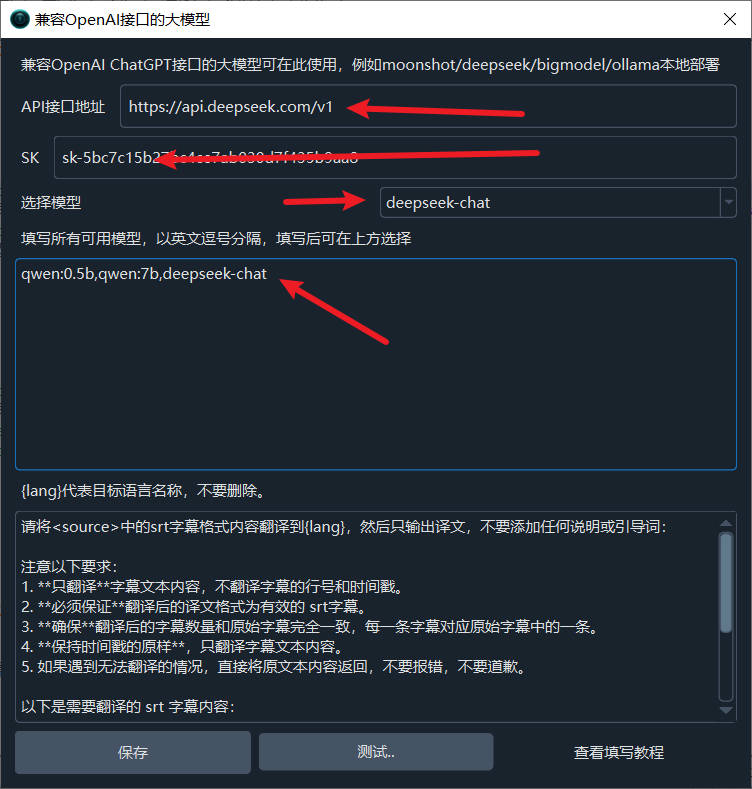

Deepseek AI Usage

- Menu bar -- Translation Settings -- OpenAI ChatGPT API Settings interface

- Enter https://api.deepseek.com/v1 in the API interface address.

- Fill in the API Key obtained from the DeepSeek open platform in the SK field. You can obtain it from this website: https://platform.deepseek.com/api_keys

- Enter deepseek-chat in the model text box area.

- Then select deepseek-chat in the model selection, test it, and save the settings if the test succeeds.
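The test step presumably sends a short request and checks that a reply comes back. As a sketch of that check (an assumption about how such a test could work, not the software's actual code), the function below extracts the reply text from a response in the standard OpenAI chat completion format, and surfaces the message from the "error" object that OpenAI-compatible services typically return on failure, for example for a wrong key.

```python
import json


class ChatAPIError(RuntimeError):
    """Raised when the service returns an error object or an unexpected shape."""


def extract_reply(raw: str) -> str:
    """Return the assistant text from an OpenAI-style chat completion JSON body."""
    data = json.loads(raw)
    # OpenAI-compatible services usually report failures as {"error": {"message": ...}}.
    if isinstance(data, dict) and "error" in data:
        err = data["error"]
        message = err.get("message") if isinstance(err, dict) else str(err)
        raise ChatAPIError(message or "unknown error")
    try:
        return data["choices"][0]["message"]["content"]
    except (KeyError, IndexError, TypeError) as exc:
        raise ChatAPIError(f"unexpected response shape: {raw[:200]}") from exc


if __name__ == "__main__":
    sample = '{"choices": [{"message": {"role": "assistant", "content": "Hello"}}]}'
    print(extract_reply(sample))  # Hello
```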

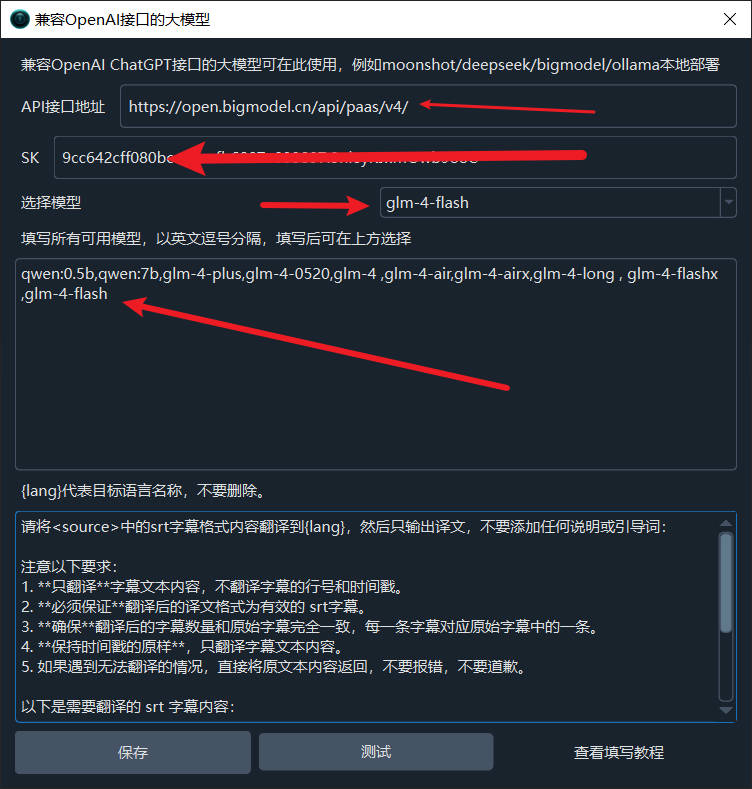

Zhipu AI BigModel Usage

- Menu bar -- Translation Settings -- OpenAI ChatGPT API Settings interface

- Enter https://open.bigmodel.cn/api/paas/v4/ in the API interface address.

- Fill in the API Key obtained from the Zhipu BigModel open platform in the SK field. You can obtain it from this website: https://www.bigmodel.cn/usercenter/apikeys

- Enter glm-4-plus,glm-4-0520,glm-4,glm-4-air,glm-4-airx,glm-4-long,glm-4-flashx,glm-4-flash in the model text box area.

- Then select the model you want to use in the model selection (you can choose the free model glm-4-flash), test it, and save the settings if the test succeeds.
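The model text box takes a comma-separated list, and a long list like Zhipu's is easy to mistype with stray spaces. A minimal sketch of how such a field could be normalized (trim each name, drop empty entries and duplicates, keep the order). Whether the software itself tolerates extra spaces is not stated in this guide, so entering the list without spaces remains the safe choice.

```python
def parse_model_list(field: str) -> list[str]:
    """Split a comma-separated model field into clean, unique names (order preserved)."""
    seen: set[str] = set()
    models: list[str] = []
    for name in field.split(","):
        name = name.strip()  # remove stray spaces such as "glm-4 ,glm-4-air"
        if name and name not in seen:
            seen.add(name)
            models.append(name)
    return models


if __name__ == "__main__":
    raw = "glm-4-plus,glm-4-0520,glm-4 ,glm-4-air,glm-4-airx,glm-4-long , glm-4-flashx ,glm-4-flash"
    print(parse_model_list(raw))
```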

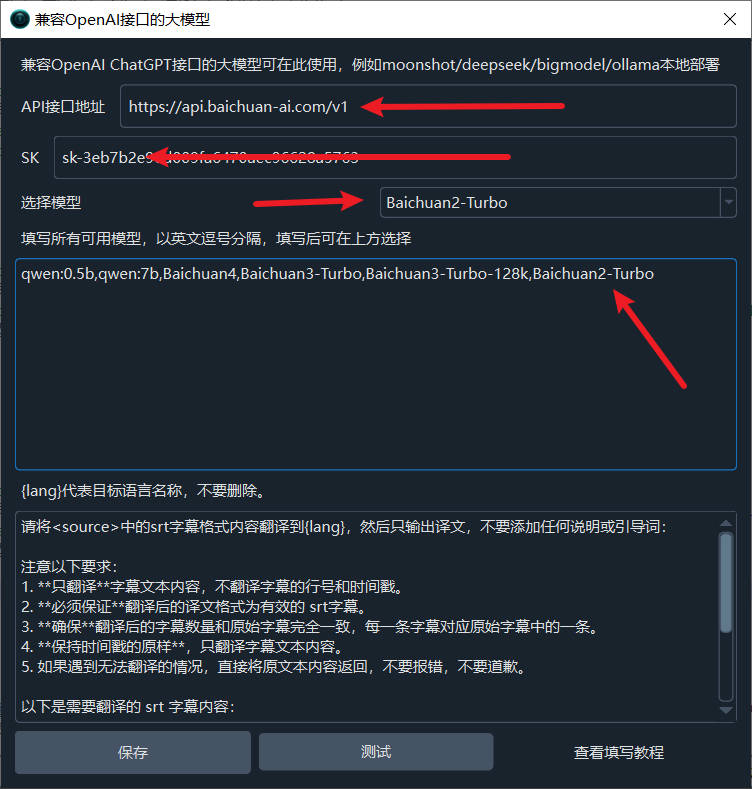

Baichuan AI Usage

- Menu bar -- Translation Settings -- OpenAI ChatGPT API Settings interface

- Enter https://api.baichuan-ai.com/v1 in the API interface address.

- Fill in the API Key obtained from the Baichuan open platform in the SK field. You can obtain it from this website: https://platform.baichuan-ai.com/console/apikey

- Enter Baichuan4,Baichuan3-Turbo,Baichuan3-Turbo-128k,Baichuan2-Turbo in the model text box area.

- Then select the model you want to use in the model selection, test it, and save the settings if the test succeeds.

01.AI (Lingyiwanwu)

Website: https://lingyiwanwu.com

API KEY Acquisition Address: https://platform.lingyiwanwu.com/apikeys

API URL: https://api.lingyiwanwu.com/v1

Available Models: yi-lightning

Alibaba Bailian

Alibaba Bailian is an AI model marketplace that offers Alibaba's own models as well as models from other vendors, including deepseek-r1.

Official Website: https://bailian.console.aliyun.com

API KEY (SK) Acquisition Address: https://bailian.console.aliyun.com/?apiKey=1#/api-key

API URL: https://dashscope.aliyuncs.com/compatible-mode/v1

Available Models: Many, see details at https://bailian.console.aliyun.com/#/model-market

Silicon Flow

Another AI marketplace similar to Alibaba Bailian, providing mainstream domestic models, including deepseek-r1.

Official Website: https://siliconflow.cn

API KEY (SK) Acquisition Address: https://cloud.siliconflow.cn/account/ak

API URL: https://api.siliconflow.cn/v1

Available Models: Many, see details at https://cloud.siliconflow.cn/models?types=chat

Note: Silicon Flow offers Qwen/Qwen2.5-7B-Instruct as a free model, which you can use directly at no cost.
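The connection details listed for 01.AI, Alibaba Bailian, and Silicon Flow fit naturally into a small preset table. The sketch below only records the values given in this guide; the ProviderPreset structure and settings_for helper are illustrative names, not part of the software. No single model is named for Bailian, so that entry leaves the model empty.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ProviderPreset:
    base_url: str                 # value for the "API URL" field
    key_page: str                 # where to create the API KEY (SK)
    example_model: Optional[str]  # a model named in this guide, if any


# Values copied from this guide; check each provider's console for current details.
PRESETS = {
    "01.AI": ProviderPreset(
        "https://api.lingyiwanwu.com/v1",
        "https://platform.lingyiwanwu.com/apikeys",
        "yi-lightning",
    ),
    "Alibaba Bailian": ProviderPreset(
        "https://dashscope.aliyuncs.com/compatible-mode/v1",
        "https://bailian.console.aliyun.com/?apiKey=1#/api-key",
        None,  # many models; pick one from the Bailian model market
    ),
    "Silicon Flow": ProviderPreset(
        "https://api.siliconflow.cn/v1",
        "https://cloud.siliconflow.cn/account/ak",
        "Qwen/Qwen2.5-7B-Instruct",  # the free model mentioned above
    ),
}


def settings_for(provider: str) -> ProviderPreset:
    """Look up a preset by name; raises KeyError for unknown providers."""
    return PRESETS[provider]


if __name__ == "__main__":
    preset = settings_for("Silicon Flow")
    print(preset.base_url, preset.example_model)
```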

ByteDance Volcano Engine Ark

Another AI marketplace similar to Alibaba Bailian. Besides the Doubao series models, it also offers some third-party models, including deepseek-r1.

Official Website: https://www.volcengine.com/product/ark

API KEY (SK) Acquisition Address: https://console.volcengine.com/ark/region:ark+cn-beijing/apiKey

API URL: https://ark.cn-beijing.volces.com/api/v3

Available Models: Many, see details at https://console.volcengine.com/ark/region:ark+cn-beijing/model?vendor=Bytedance&view=LIST_VIEW

Note: ByteDance Volcano Engine Ark's compatibility with the OpenAI SDK is somewhat unusual: you cannot enter a model name directly. Instead, first create an inference endpoint in the Volcano Engine Ark console and select the model to use for that endpoint, then enter the inference endpoint ID in the software wherever a model name is required. If this seems like too much trouble, you can skip this provider; apart from a slightly lower price, it offers no particular advantage. For how to create an inference endpoint, see: https://www.volcengine.com/docs/82379/1099522
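In other words, for Volcano Engine Ark the value entered as the model is an endpoint ID, not a model name. The sketch below guards against that mix-up. It assumes Ark endpoint IDs start with "ep-", which is how the Ark console displays them but is not stated in this guide; the ID in the example is a made-up placeholder.

```python
def ark_model_field(value: str) -> str:
    """Return the value to enter as the "model" for Volcano Engine Ark.

    Assumption: Ark inference endpoint IDs begin with "ep-". A plain model
    name is rejected because, per this guide, Ark expects the endpoint ID.
    """
    value = value.strip()
    if not value.startswith("ep-"):
        raise ValueError(
            f"{value!r} looks like a model name; create an inference endpoint "
            "in the Ark console and use its endpoint ID instead"
        )
    return value


if __name__ == "__main__":
    # Hypothetical endpoint ID, for illustration only.
    print(ark_model_field(" ep-20250101000000-abcde "))
```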

Precautions:

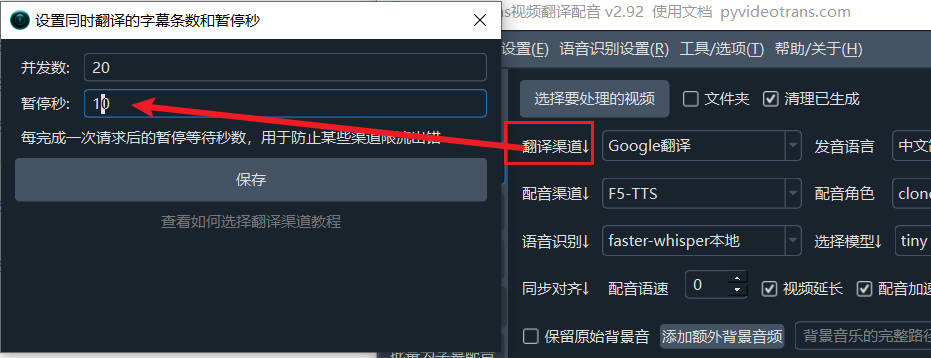

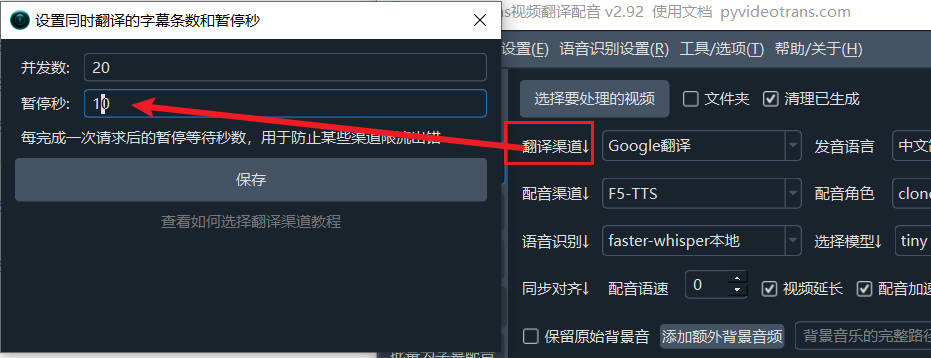

Most AI translation channels limit the number of requests per minute. If an error message says the request frequency has been exceeded, click "Translation Channel↓" on the software's main interface and change the pause seconds to 10 in the pop-up window. The software then waits 10 seconds after each translation before sending the next request, i.e., at most 6 requests per minute, which keeps you under the limit.
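The pause setting amounts to a fixed delay between consecutive requests: with a 10-second pause, at most 60 / 10 = 6 requests go out per minute (slightly fewer in practice, since each request also takes time). A minimal sketch of the idea; translate_one is a hypothetical stand-in for whatever actually calls the API, and the sleep function is injectable so the logic can be checked without waiting.

```python
import time
from typing import Callable, Iterable


def translate_with_pause(
    batches: Iterable[str],
    translate_one: Callable[[str], str],
    pause_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> list[str]:
    """Translate batches one by one, pausing between consecutive requests."""
    results: list[str] = []
    for i, batch in enumerate(batches):
        if i > 0:
            sleep(pause_seconds)  # wait before every request except the first
        results.append(translate_one(batch))
    return results


def max_requests_per_minute(pause_seconds: float) -> int:
    """Approximate request ceiling per minute for a given pause."""
    return int(60 // pause_seconds)


if __name__ == "__main__":
    print(max_requests_per_minute(10))  # 6
```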

If the selected model is not capable enough (locally deployed models in particular are usually small because of hardware limits), it may fail to follow the instructions and return translations in the required format, and the results may contain too many blank lines. In this case, try a larger model, or open Menu -- Tools/Options -- Advanced Options and uncheck "Send complete subtitles content when using AI translation".
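One way to detect the formatting problem described above is to compare line counts: if the model is asked for one translated line per subtitle line, its reply should contain the same number of non-empty lines. The helper below is hypothetical, shown only to illustrate the check; it is not a feature of the software.

```python
def clean_translation(source_lines: list[str], reply: str) -> list[str]:
    """Drop blank lines from a model reply and verify it matches the source line count.

    Raises ValueError if the line counts differ, which usually means the
    model did not follow the format instructions.
    """
    lines = [line.strip() for line in reply.splitlines() if line.strip()]
    if len(lines) != len(source_lines):
        raise ValueError(
            f"expected {len(source_lines)} lines, got {len(lines)} non-empty lines"
        )
    return lines


if __name__ == "__main__":
    src = ["你好", "再见"]
    print(clean_translation(src, "Hello\n\n\nGoodbye\n"))  # ['Hello', 'Goodbye']
```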

Use ollama to locally deploy the Tongyi Qianwen large language model

If you are comfortable with a little hands-on setup, you can also deploy a large language model locally and use it for translation. The following takes Tongyi Qianwen as an example to show how to deploy and use it.

1. Download the installer (exe) and get it running

Open the website https://ollama.com/download

Click Download. When the download finishes, double-click the file to open the installer and click Install.

When installation finishes, a black or blue command window pops up automatically. Type the three words ollama run qwen and press Enter, and the Tongyi Qianwen model will download automatically.

Wait for the model to finish downloading. No proxy is required and the speed is quite fast.

Once downloaded, the model starts automatically. When the progress reaches 100% and "Success" is displayed, the model is running. This means the Tongyi Qianwen large language model is fully installed and deployed, and you can start using it. Isn't that simple?

The default interface address is http://localhost:11434
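To confirm from a script that ollama is listening on that address and to see which models have been downloaded, you can query its /api/tags endpoint, which returns JSON with a "models" array. The sketch below keeps fetching and parsing separate so the parsing can be checked offline; real entries carry more fields (size, digest, and so on) than the abbreviated sample in the usage note.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # default address from this guide


def parse_model_names(body: str) -> list[str]:
    """Extract model names (e.g. "qwen:latest") from an /api/tags JSON body."""
    data = json.loads(body)
    return [m["name"] for m in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> list[str]:
    """Ask a running ollama server which models it has downloaded."""
    with urllib.request.urlopen(base_url.rstrip("/") + "/api/tags", timeout=timeout) as resp:
        return parse_model_names(resp.read().decode("utf-8"))


if __name__ == "__main__":
    try:
        print(list_local_models())
    except OSError as exc:  # URLError is a subclass of OSError
        print("ollama does not seem to be running:", exc)
```

For example, parse_model_names('{"models": [{"name": "qwen:latest"}]}') returns ["qwen:latest"].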

If the window is closed, how do you open it again? It is also very simple: open the Start menu, find "Command Prompt" or "Windows PowerShell" (or press Win + Q and search for cmd), open it, and enter ollama run qwen.

2. Use directly in the console command window

As shown in the figure, once this interface appears you can already type text directly into the window and start using the model.

3. The console is not very user-friendly, so let's set up a friendlier UI

Open the website https://chatboxai.app/zh and click Download

After downloading, double-click and wait for the interface window to open automatically.

Click "Start Settings". In the pop-up panel, click the model tab at the top, select "Ollama" as the AI model provider, enter http://localhost:11434 as the API domain, select Qwen:latest from the model drop-down menu, and save.

After saving, the chat interface appears. Use your imagination and use it however you like.

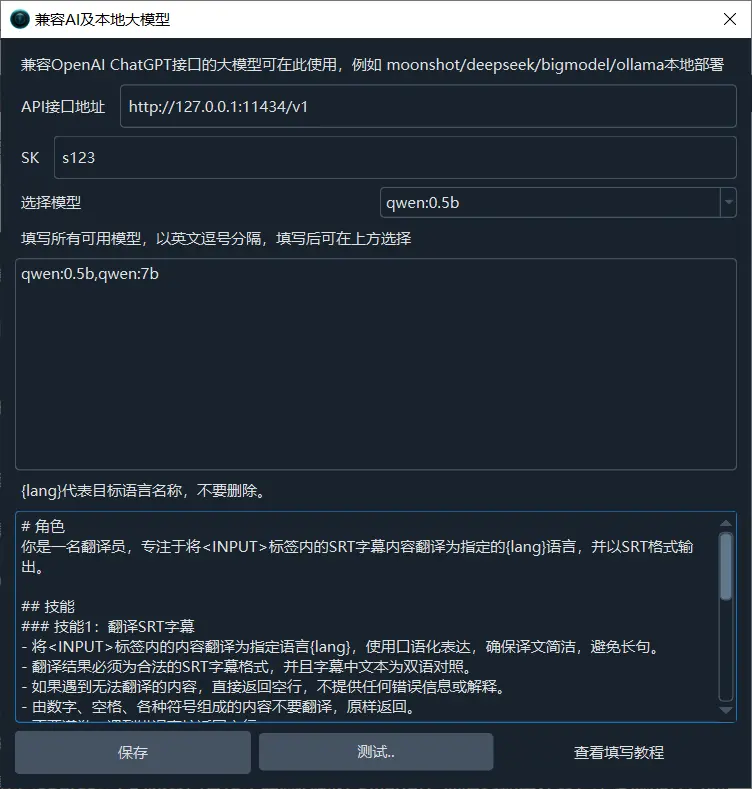

4. Fill in the API in the video translation and dubbing software

Open Menu -- Settings -- Compatible with OpenAI and local large language models, and add a model: append ,qwen to the middle text box (it looks like the figure below after adding), then select that model.

Enter http://localhost:11434/v1 as the API URL, and fill in the SK with any value, such as 1234.

Test the connection; if it succeeds, save the settings and start using it.
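The test can also be reproduced outside the software to rule out problems with ollama itself. The sketch below posts one short message to ollama's OpenAI-compatible endpoint at http://localhost:11434/v1 with the arbitrary SK 1234 (ollama does not check the key). The model name qwen comes from this guide; the prompt is illustrative, and the opener parameter is a hypothetical hook that lets the function run without a live server.

```python
import json
import urllib.request
from typing import Callable

BASE_URL = "http://localhost:11434/v1"
API_KEY = "1234"  # any placeholder works; ollama does not check it


def check_connection(
    model: str = "qwen",
    base_url: str = BASE_URL,
    opener: Callable = urllib.request.urlopen,
) -> bool:
    """Send a one-line chat request; return True if reply text comes back."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps({
            "model": model,
            "messages": [{"role": "user", "content": "Say OK"}],
        }).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {API_KEY}"},
        method="POST",
    )
    try:
        with opener(req, timeout=60) as resp:
            data = json.loads(resp.read().decode("utf-8"))
        return bool(data["choices"][0]["message"]["content"])
    except (OSError, ValueError, KeyError, IndexError, TypeError):
        # Connection refused, bad JSON, or an unexpected response all count as failure.
        return False


if __name__ == "__main__":
    print(check_connection())
```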

5. What other models can be used

Besides Tongyi Qianwen, many other models are available, and using them is just as simple: three words, ollama run followed by the model name.

Open https://ollama.com/library to see all model names. Copy the name you want to use, then run ollama run followed by that name.

Remember how to open the command window? Click the Start menu and find Command Prompt or Windows PowerShell.

For example, to install the openchat model:

Open Command Prompt, enter ollama run openchat, press Enter, and wait until Success is displayed.
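Ollama lists downloaded models with a tag (Chatbox shows qwen as Qwen:latest, for example), while the command line accepts the short name; when no tag is given, ollama uses latest. A small sketch assuming that default: full_model_name shows the tagged name to expect, and run_command builds the ollama run argument list. start_model actually launches ollama and is only run when you call it yourself.

```python
import subprocess


def full_model_name(name: str) -> str:
    """Add ollama's default ":latest" tag when no tag is given."""
    name = name.strip()
    return name if ":" in name else f"{name}:latest"


def run_command(name: str) -> list[str]:
    """Argument list for "ollama run" followed by the model name."""
    return ["ollama", "run", name.strip()]


def start_model(name: str) -> None:
    """Download the model if needed and start it; requires ollama on PATH."""
    subprocess.run(run_command(name), check=True)


if __name__ == "__main__":
    print(full_model_name("openchat"))  # openchat:latest
```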
